Tulsa AI Learning Lab

Session 1:

Product Engineering for AI

🤝 Quick Intro

Presenter

Kaitlin Cort

> Software Engineer (AI Platform) @ AidKit.org

> Background: data science for public policy, New York State Assembly

> New member: Tulsa Remote

Connect: kaitlin.cort@owasp.org

🚀 What we'll cover:

- Intro to AI & Product Engineering

- Basic concepts: Generative AI and LLMs

- Evolving role of software engineers

- AI Product Development Lifecycle

- System Architecture: RAG Pipeline with Agents

- Hands-on: Deploying a tiny LLM (Qwen2.5) on AWS EC2

- Discussion: Unique challenges for building with AI and opportunities in Tulsa

Basic Terms & Concepts

Large Language Models (LLMs): AI systems trained on massive text datasets to understand, summarize, generate, and predict human language.

Embeddings: Numerical representations (vectors) of words, sentences, or documents in a high-dimensional space, where items with similar meanings lie close together.

Tokens: The basic units LLMs process (whole words, parts of words, or individual characters).
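To make tokens concrete, here is a simplified sketch of subword tokenization. It uses a greedy longest-match rule over a tiny, hypothetical vocabulary (`VOCAB`). Production tokenizers such as byte-pair encoding learn far larger vocabularies from data, so treat this only as an illustration of how a word can be split into parts, with single characters as the fallback.

```python
# A toy greedy longest-match subword tokenizer.
# ASSUMPTION: this vocabulary is hypothetical; real LLM tokenizers
# (e.g. byte-pair encoding) learn tens of thousands of pieces from data.
VOCAB = {"token", "izer", "un", "break", "able", "s", " "}


def tokenize(text: str, vocab: set[str] = VOCAB) -> list[str]:
    """Split text into the longest vocabulary pieces, falling back to single characters."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest possible piece starting at position i first.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # No vocabulary piece matched: emit a single-character token.
            tokens.append(text[i])
            i += 1
    return tokens


print(tokenize("unbreakable tokenizers"))
# → ['un', 'break', 'able', ' ', 'token', 'izer', 's']
```

Notice that one English word can become several tokens, which is why token counts (and token-based pricing) usually exceed word counts.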

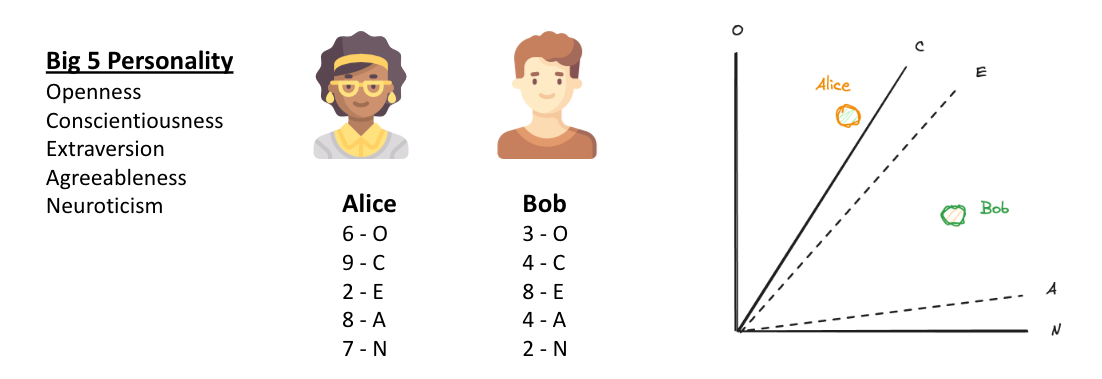

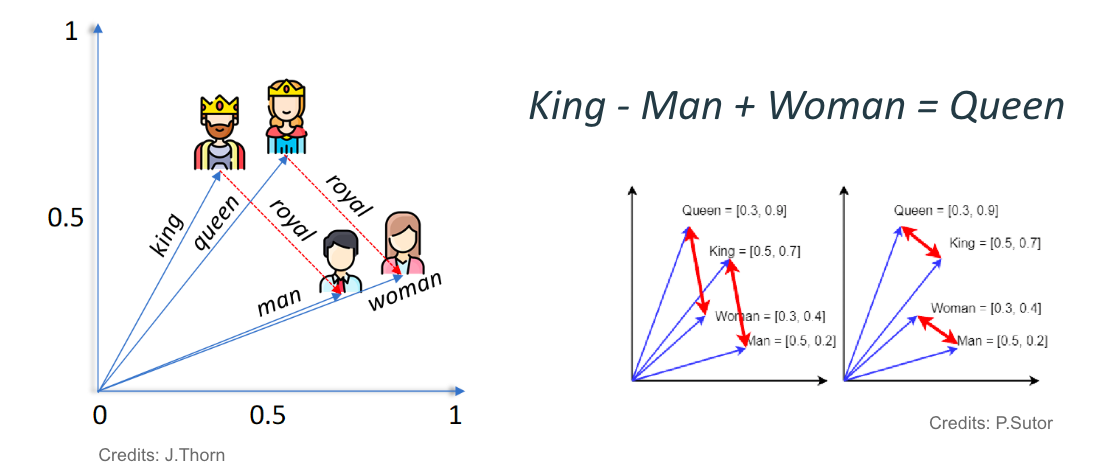

Embeddings

Coordinates for words and concepts.

Imagine visualizing Alice and Bob as personalities in a 5-dimensional space.
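A minimal sketch of this idea, assuming made-up scores for Alice, Bob, and a third person, Carol (all values and the trait order are hypothetical). Each person becomes a 5-number vector, and cosine similarity measures how closely two vectors point in the same direction.

```python
import math

# Hypothetical personality scores on five traits, each scaled to [-1, 1].
# Assumed trait order: openness, conscientiousness, extraversion,
# agreeableness, neuroticism.
alice = [0.8, -0.2, 0.5, 0.9, -0.4]
bob = [0.7, -0.1, 0.4, 0.8, -0.5]
carol = [-0.6, 0.9, -0.7, -0.3, 0.6]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, -1.0 = opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(f"alice~bob:   {cosine_similarity(alice, bob):.2f}")
print(f"alice~carol: {cosine_similarity(alice, carol):.2f}")
```

Alice and Bob score close to 1 (similar personalities), while Alice and Carol score below 0. Word embeddings work the same way, just with hundreds of learned dimensions instead of five named traits.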

Embeddings

Vector arithmetic lets us compute with meaning (e.g., king - man + woman ≈ queen).

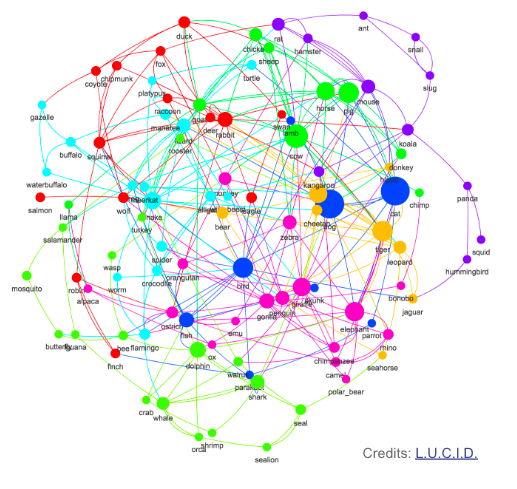
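The classic illustration of vector composition is the analogy king - man + woman ≈ queen. The sketch below reproduces it with hand-picked 3-dimensional vectors. The `EMBEDDINGS` table is hypothetical (real models learn hundreds of dimensions): subtract, add, then return the nearest remaining word by cosine similarity.

```python
import math

# Toy 3-dimensional "embeddings". ASSUMPTION: values are made up so that
# dimension 0 ~ "royalty", 1 ~ "male", 2 ~ "female".
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man": [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.3, 0.3],
}


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a: str, b: str, c: str, vocab: dict = EMBEDDINGS) -> str:
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the input words."""
    target = [x - y + z for x, y, z in zip(vocab[a], vocab[b], vocab[c])]
    candidates = [w for w in vocab if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(vocab[w], target))


print(analogy("king", "man", "woman"))  # → queen
```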

Semantic Network

OpenAI Embeddings: text-embedding-ada-002

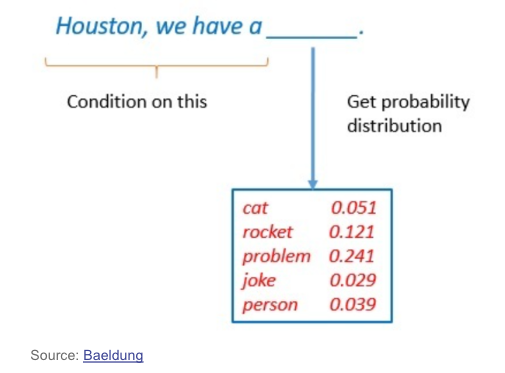

Probability

Predict the next word (or pixel)
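Next-word prediction comes down to turning the model's raw scores (logits) into a probability distribution with softmax, then choosing a word from it. The candidate words and scores below are invented for illustration. The `temperature` parameter shows how sampling can be made more or less adventurous.

```python
import math
import random

# Hypothetical scores (logits) a model might assign to candidate next words
# after the prompt "The cat sat on the". Real models score every token in a
# vocabulary of tens of thousands of entries.
logits = {"mat": 4.0, "sofa": 3.0, "roof": 2.0, "moon": 0.5}


def softmax(scores: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1 (lower temperature = sharper)."""
    scaled = {w: s / temperature for w, s in scores.items()}
    max_s = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - max_s) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


probs = softmax(logits)
greedy = max(probs, key=probs.get)  # always pick the most likely word
sampled = random.choices(list(probs), weights=list(probs.values()))[0]  # sample by probability
print({w: round(p, 3) for w, p in probs.items()})
print("greedy:", greedy, "| sampled:", sampled)
```

Greedy decoding is deterministic, while sampling occasionally picks less likely words, which is one reason the same prompt can produce different answers.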

Basic Terms & Concepts

Generative AI: A subset of artificial intelligence that uses sophisticated algorithms and machine learning models to create novel content, such as text, images, videos, music, or voices.

Relationship between AI disciplines and where Generative AI fits

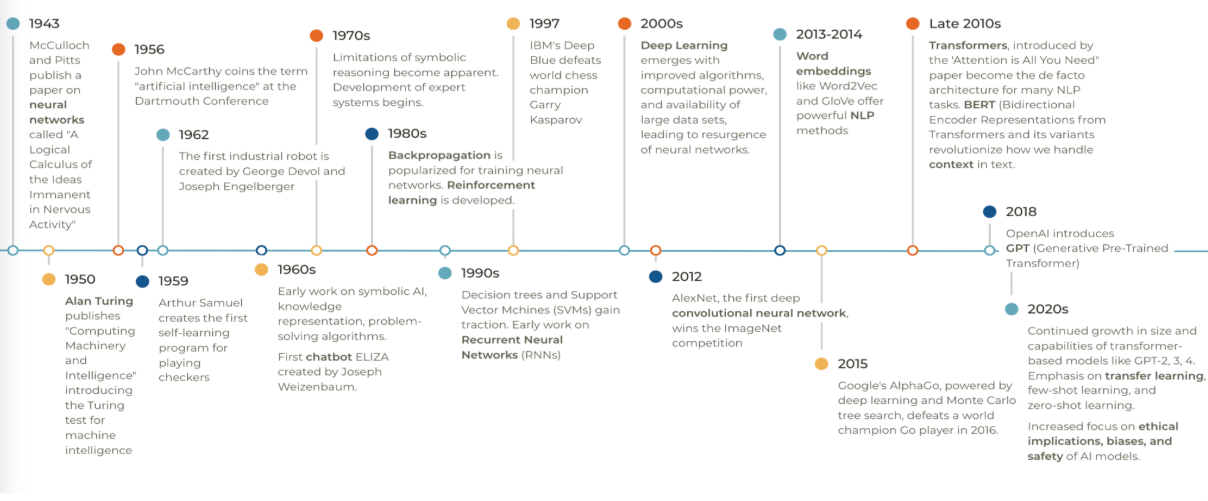

History of A.I.

Timeline of AI research and development

This A.I. Moment

Computational Power: Hardware accelerators such as Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) make training very large models feasible.

Availability of Data: Decades of "big data" from social media and the web allow models to learn patterns and relationships.

Deep Learning Renaissance: The Transformer architecture (2017) uses attention mechanisms that let a model focus on the most relevant parts of its input, giving a scalable, efficient way to process sequences.

Bigger is, in fact, better: scaling up parameters, training data, and compute has steadily improved model performance.
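For readers who want to see attention in code, here is a plain-Python sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, the core operation of the Transformer. It is simplified under stated assumptions: the token vectors are made up, and queries, keys, and values are the same vectors rather than learned projections.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors (one per token). Every query is compared
    with every key; the resulting weights decide how much of each value vector
    flows into that query's output.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three tokens with made-up 2-dimensional vectors. ASSUMPTION: Q = K = V here
# for simplicity; real Transformers compute Q, K, V with learned weight matrices.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(x, x, x)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how strongly one token "attends" to every token; because all rows can be computed independently, attention parallelizes well on GPUs and TPUs.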

Opportunities in A.I.

Industries Being Transformed

- 🏥 Healthcare: diagnostics, drug discovery, patient care

- 🏦 Finance: risk assessment, fraud detection, investments

- 🏭 Manufacturing: predictive maintenance, quality control

- 🚚 Logistics: route optimization, inventory management

- 🛍️ Retail: personalization, demand forecasting

- 🏢 Real Estate: valuation, market analysis

- 👩‍⚖️ Legal: document analysis, compliance monitoring

- 🎓 Education: personalized learning, assessment

Tulsa

✈️ Aerospace & Defense: Advanced manufacturing, predictive maintenance, autonomous systems, supply chain

⛽ Energy: AI for oil & gas optimization, renewable forecasting, smart grid

🏭 Real Estate & Retail: Customer service, fraud detection, smart building management

🚚 Logistics: Route optimization for central U.S. hub, warehouse automation, data centers

🏛️ GovTech & Philanthropy: Service modernization, data-driven policy, community engagement, participatory AI governance

AI could contribute up to $15.7 trillion to the global economy in 2030 (PwC)

Changing Role of Software Engineers

🤖 AI Integration: Developing architectures that effectively combine traditional code with AI capabilities while maintaining reliability.

💡 Product-Focused Engineering: Understanding user needs and business value becomes critical as fewer engineers are needed to build a product.

🔄 Adaptive System Design: Creating flexible architectures that can evolve with rapidly improving AI capabilities and changing user expectations.

🛡️ Evaluation & Guardrails: Creating robust testing frameworks and safety measures for systems that may exhibit unpredictable behaviors.

📊 Data Engineering: Growing emphasis on high-quality data pipelines, synthetic data generation, and efficient vector storage solutions.

Software engineers are becoming AI orchestrators and product strategists, rather than just code developers.

What is AI Product Engineering?

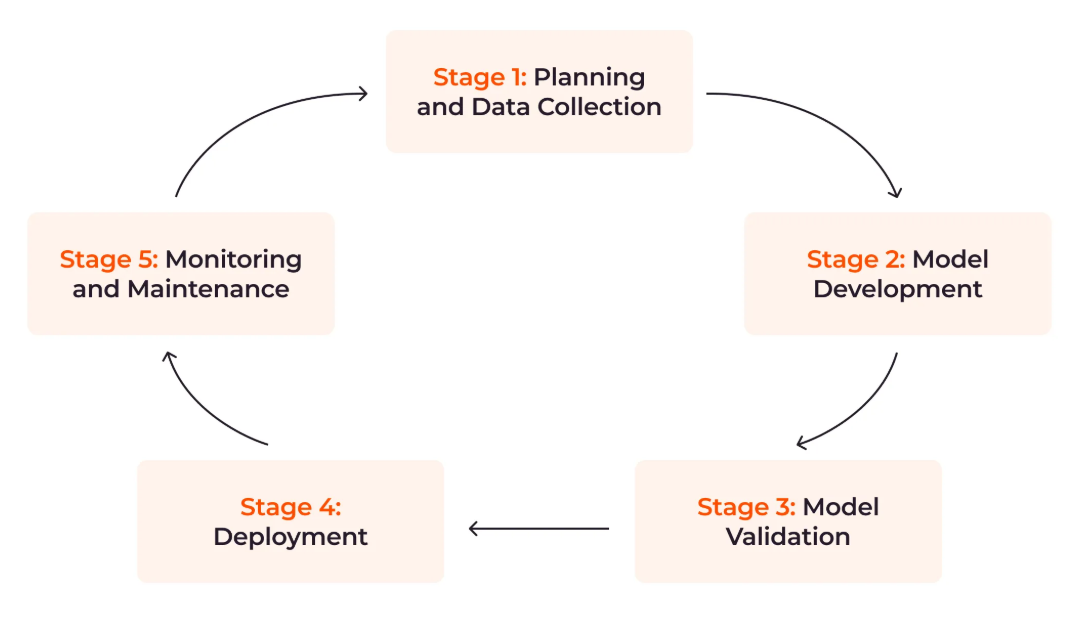

Key Stages

1. User-centered design: problem identification & requirements analysis

2. Data collection & preparation

3. Model training & development

4. Model evaluation: testing & refinement

5. Deployment & integration

6. Monitoring & improvement

The AI Development Lifecycle

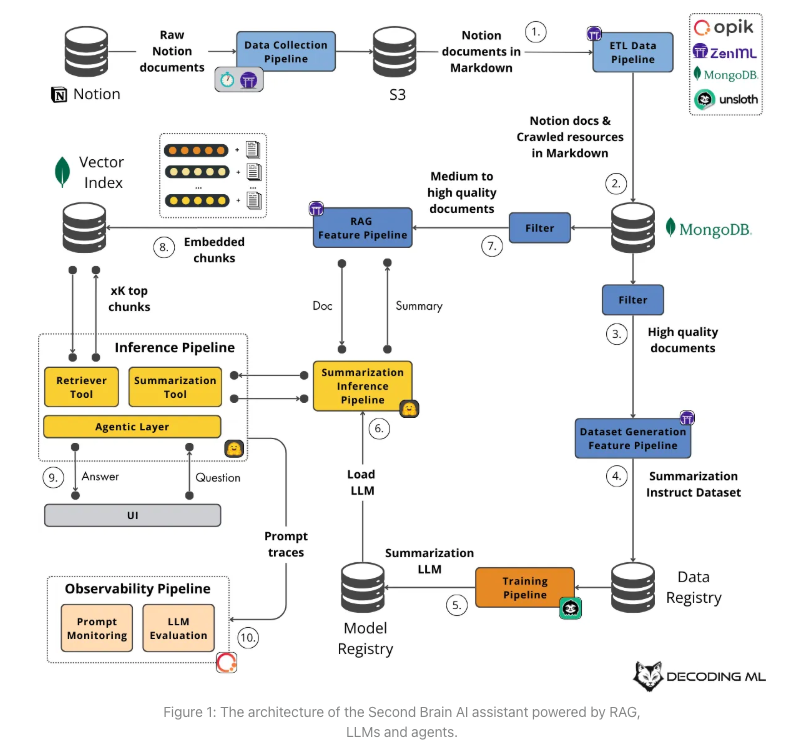

AI System Architecture

Components

- Core LLM: Meta Llama 3.1 8B (fine-tuned for summarization)

- Vector DB: MongoDB Vector Search for embeddings & metadata

- Data pipelines: Notion Collector, ETL Crawler, Document DB, Data Registry

- RAG pipeline: LangChain, semantic retrieval at query time

- Agent: Hugging Face smolagents for retrieval + summarization + synthesis

- Orchestration: ZenML (offline); Gradio and Hugging Face endpoints for REST deployments (online)

- Observability: Opik for prompt monitoring and LLM evaluation
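The retrieval step of the RAG pipeline can be sketched without any framework. This is not the LangChain or MongoDB Vector Search setup listed above. As an assumption, a bag-of-words counter stands in for the embedding model and a Python list stands in for the vector database. The flow is the same: embed the query, rank stored chunks by similarity, and prepend the best matches to the prompt sent to the LLM.

```python
import math
import re
from collections import Counter

# ASSUMPTION: toy documents; a word-count vector stands in for a real embedding
# model, and a Python list stands in for the vector database.
DOCUMENTS = [
    "Ollama runs open-source language models on your own machine.",
    "Amazon EC2 provides resizable virtual servers in the AWS cloud.",
    "Embeddings map text to vectors so similar meanings sit close together.",
]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Ingestion: embed every document once and store (text, vector) pairs.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(query: str, k: int = 2) -> list[str]:
    """Retrieval: return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda pair: cosine(q, pair[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str, k: int = 2) -> str:
    """Augmentation: put the retrieved context in front of the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


print(build_prompt("How do I run a language model on my own machine?", k=1))
```

In the architecture above, the assembled prompt would then go to the core LLM or the agent, with the call traced for observability; those integrations are omitted here.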

Model Evaluation in Production

Metrics to Consider

- Accuracy/quality of outputs

- Latency and throughput

- Cost per inference

- Hallucination rate

- Ethical considerations

- User satisfaction
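Several of these metrics reduce to simple arithmetic over request logs. The sketch below assumes a hypothetical log record (`InferenceLog`) and hypothetical per-token prices. It computes median and 95th-percentile latency (nearest-rank method), average cost per inference, hallucination rate (the share of responses flagged as hallucinated by whatever reviewer or checker you use), and average user rating.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Optional

# ASSUMPTION: hypothetical prices; substitute your provider's actual rates.
PRICE_PER_1K_INPUT_USD = 0.0005
PRICE_PER_1K_OUTPUT_USD = 0.0015


@dataclass
class InferenceLog:
    """One logged request (hypothetical schema)."""
    latency_ms: float
    input_tokens: int
    output_tokens: int
    hallucinated: bool          # flagged by a reviewer or an automated checker
    user_rating: Optional[int]  # 1-5 stars, or None if the user did not rate


def summarize(logs: list[InferenceLog]) -> dict:
    latencies = sorted(log.latency_ms for log in logs)
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)  # nearest-rank percentile
    total_cost = sum(
        log.input_tokens / 1000 * PRICE_PER_1K_INPUT_USD
        + log.output_tokens / 1000 * PRICE_PER_1K_OUTPUT_USD
        for log in logs
    )
    ratings = [log.user_rating for log in logs if log.user_rating is not None]
    return {
        "p50_latency_ms": statistics.median(latencies),
        "p95_latency_ms": latencies[p95_index],
        "cost_per_inference_usd": total_cost / len(logs),
        "hallucination_rate": sum(log.hallucinated for log in logs) / len(logs),
        "avg_user_rating": statistics.mean(ratings) if ratings else None,
    }


# Made-up sample logs.
logs = [
    InferenceLog(120, 500, 200, False, 5),
    InferenceLog(180, 800, 300, False, 4),
    InferenceLog(950, 1200, 600, True, 2),
    InferenceLog(140, 400, 150, False, None),
]
print(summarize(logs))
```

Tail latency (p95) matters more than the average for user experience: here a single slow request sets the p95 even though the median is low.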

Monitoring Tools

- CloudWatch metrics

- A/B testing

- User feedback loops

- Regression testing

- Prompt evaluation frameworks: Evidently, Opik, Helicone

- LangSmith tracing & evaluation

- ArizeAI for drift detection, FiddlerAI for performance

- Giskard bias and hallucination monitoring

- MLflow for model versioning
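A/B testing two prompt or model variants often comes down to comparing success rates, such as the share of thumbs-up ratings. One standard way to check whether a difference is larger than chance is a two-proportion z-test. The sketch below implements it with the pooled normal approximation, using invented counts.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int):
    """Compare two success rates (e.g. thumbs-up rate for variant A vs variant B).

    Returns (z, two_sided_p_value) using the pooled normal approximation.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: 1,000 users per variant.
z, p = two_proportion_z_test(successes_a=620, n_a=1000, successes_b=680, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be noise; with small samples, an exact test is more appropriate than this normal approximation.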

Unique Challenges

Technical Challenges

- Data quality and availability

- Model performance and reliability

- Compute resources and costs

- Deployment and scaling

- Data privacy and security

- Keeping up with the latest models and techniques

- AI is eating the world!

Number One challenge...

Ethical Challenges

- Bias and fairness

- Transparency and explainability

- Privacy concerns

- Responsible AI usage & governance: copyright, deepfakes, misinformation

- Workforce displacement

- Environmental impact

Hands-on Activity

Deploying a Tiny LLM

We'll deploy SmolLM2 and Qwen2.5, small but powerful LLMs.

Using:

- AWS EC2 (Free Tier)

- Ollama: open-source tool for running models locally
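Once Ollama is installed on the EC2 instance and a model has been pulled, other programs can talk to it over its local REST API. Below is a minimal Python client sketch using only the standard library. It assumes Ollama's default port 11434 and its `/api/generate` endpoint. The model tag `qwen2.5:0.5b` is an assumption, so check `ollama list` for the tags you actually pulled.

```python
import json
import urllib.request

# ASSUMPTIONS: Ollama is serving on its default port on this machine (or is
# reachable through an SSH tunnel), and the model tag below has been pulled.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "qwen2.5:0.5b") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint (non-streaming)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "qwen2.5:0.5b", timeout: float = 120.0) -> str:
    """Send the prompt and return the model's full response text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

For example, `generate("Explain embeddings in one sentence.")` returns the full answer as a string; passing `model="smollm2:135m"` (also an assumed tag) would query SmolLM2 instead. Setting `"stream": False` asks Ollama for one JSON object rather than a stream of partial chunks.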

Next Steps

Resources

Coming in Session 2

- Amazon Bedrock for simplified AI orchestration

- Edge AI deployment on Raspberry Pi

- Environmental sensor integration

- RAG patterns for knowledge augmentation

Thank You!